
22 Publications

Animals smelling in the real world use a small number of receptors to sense a vast number of natural molecular mixtures and proceed to learn arbitrary associations between odors and valences. Here, we propose how the architecture of olfactory circuits leverages disorder, diffuse sensing and redundancy in representation to meet these immense complementary challenges. First, the diffuse and disordered binding of receptors to many molecules compresses a vast but sparsely structured odor space into a small receptor space, yielding an odor code that preserves similarity in a precise sense. Introducing any order or structure in the sensing degrades similarity preservation. Next, lateral interactions further reduce the correlation present in the low-dimensional receptor code. Finally, expansive disordered projections from the periphery to the central brain reconfigure the densely packed information into a high-dimensional representation, which contains multiple redundant subsets from which downstream neurons can learn flexible associations and valences. Moreover, introducing any order in the expansive projections degrades the ability to recall the learned associations in the presence of noise. We test our theory empirically using data from . Our theory suggests that the neural processing of sparse but high-dimensional olfactory information differs from the other senses in its fundamental use of disorder.
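To make the compress-then-expand idea concrete, here is a minimal sketch in standard-library Python. It is not the model from the paper: zero-mean Gaussian weights stand in for disordered receptor binding (real binding affinities are non-negative), and a k-winners-take-all step stands in for whatever sparsening the expanded layer uses. All names and sizes (`N_MOLECULES`, `k_winners`, `overlap`, and so on) are hypothetical. The sketch only checks that odors sharing molecules remain more similar than unrelated odors after both the compression and the expansion.

```python
import math
import random


def cosine(u, v):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def gaussian_matrix(rows, cols, rng):
    """Disordered weights: i.i.d. standard normal entries (illustrative assumption)."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, x):
    """Matrix-vector product on plain Python lists."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in matrix]


def k_winners(x, k):
    """Binary code marking the k most active units (hypothetical sparsening step)."""
    top = set(sorted(range(len(x)), key=lambda i: x[i], reverse=True)[:k])
    return [1 if i in top else 0 for i in range(len(x))]


def overlap(a, b):
    """Fraction of active units shared by two binary codes with equal activity."""
    return sum(x & y for x, y in zip(a, b)) / sum(a)


def odor(n_molecules, components):
    """Sparse odor: unit concentration of each listed molecule, zero elsewhere."""
    v = [0.0] * n_molecules
    for i in components:
        v[i] = 1.0
    return v


rng = random.Random(0)
N_MOLECULES, N_RECEPTORS, N_EXPANSION, K_ACTIVE = 500, 100, 1000, 50

odors = {
    "a": odor(N_MOLECULES, range(0, 10)),
    "b": odor(N_MOLECULES, list(range(0, 8)) + [10, 11]),  # shares 8 of 10 molecules with "a"
    "c": odor(N_MOLECULES, range(250, 260)),  # shares no molecules with "a"
}

sensing = gaussian_matrix(N_RECEPTORS, N_MOLECULES, rng)    # compression: 500 -> 100
expansion = gaussian_matrix(N_EXPANSION, N_RECEPTORS, rng)  # expansion: 100 -> 1000

receptor = {name: matvec(sensing, v) for name, v in odors.items()}
code = {name: k_winners(matvec(expansion, r), K_ACTIVE) for name, r in receptor.items()}

print("receptor cosine a-b:", round(cosine(receptor["a"], receptor["b"]), 2))
print("receptor cosine a-c:", round(cosine(receptor["a"], receptor["c"]), 2))
print("expansion overlap a-b:", overlap(code["a"], code["b"]))
print("expansion overlap a-c:", overlap(code["a"], code["c"]))
```

Swapping the random matrices for structured ones would be one way to probe the abstract's claim that order degrades similarity preservation; the sketch itself does not test that claim.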

To flexibly navigate, many animals rely on internal spatial representations that persist when the animal is standing still in darkness, and update accurately by integrating the animal's movements in the absence of localizing sensory cues. Theories of mammalian head direction cells have proposed that these dynamics can be realized in a special class of networks that maintain a localized bump of activity via structured recurrent connectivity, and that shift this bump of activity via angular velocity input. Although there are many different variants of these so-called ring attractor networks, they all rely on large numbers of neurons to generate representations that persist in the absence of input and accurately integrate angular velocity input. Surprisingly, in the fly, Drosophila melanogaster, a head direction representation is maintained by a much smaller number of neurons whose dynamics and connectivity resemble those of a ring attractor network. These findings challenge our understanding of ring attractors and their putative implementation in neural circuits. Here, we analyzed failures of angular velocity integration that emerge in small attractor networks with only a few computational units. Motivated by the peak performance of the fly head direction system in darkness, we mathematically derived conditions under which small networks, even with as few as 4 neurons, achieve the performance of much larger networks. The resulting description reveals that by appropriately tuning the network connectivity, the network can maintain persistent representations over the continuum of head directions, and it can accurately integrate angular velocity inputs. We then analytically determined how performance degrades as the connectivity deviates from this optimally tuned setting, and we found a trade-off between network size and the tuning precision needed to achieve persistence and accurate integration.
This work shows how even small networks can accurately track an animal's movements to guide navigation, and it informs our understanding of the functional capabilities of discrete systems more broadly.
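As a small, hedged illustration of why a handful of units can in principle represent a continuum of headings, consider only the readout, not the persistence or integration dynamics the paper analyzes. Assume N units with cosine tuning, rate_k = b + a cos(theta - phi_k), and preferred headings phi_k = 2 pi k / N. The population vector sum_k rate_k e^{i phi_k} then equals (N a / 2) e^{i theta} whenever N >= 3, because sum_k e^{i phi_k} = 0 and sum_k e^{2 i phi_k} = 0, so the decoded angle is exact for every theta. With N = 2 the second sum does not vanish and decoding collapses onto one axis. The tuning model and function names below are assumptions for this sketch.

```python
import math


def tuning_rates(theta, n, baseline=1.0, gain=1.0):
    """Rates of n cosine-tuned units with preferred headings evenly spaced on the circle."""
    return [baseline + gain * math.cos(theta - 2 * math.pi * k / n) for k in range(n)]


def population_vector(rates):
    """Decode heading as the angle of the rate-weighted sum of preferred-direction vectors."""
    n = len(rates)
    x = sum(r * math.cos(2 * math.pi * k / n) for k, r in enumerate(rates))
    y = sum(r * math.sin(2 * math.pi * k / n) for k, r in enumerate(rates))
    return math.atan2(y, x) % (2 * math.pi)


def angular_error(a, b):
    """Absolute difference between two angles, wrapped to [0, pi]."""
    return abs((a - b + math.pi) % (2 * math.pi) - math.pi)


for heading in (0.1, 1.0, 2.5, 4.0, 5.9):
    decoded = population_vector(tuning_rates(heading, n=4))
    print(f"heading {heading:.2f} -> decoded {decoded:.6f}")
```

Exact decoding with four units says nothing about whether a four-neuron network can hold or move the bump; that is the question the paper's connectivity-tuning analysis addresses.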

To interpret the sensory environment, the brain combines ambiguous sensory measurements with context-specific prior experience. But environmental contexts can change abruptly and unpredictably, resulting in uncertainty about the current context. Here we address two questions: how should context-specific prior knowledge optimally guide the interpretation of sensory stimuli in changing environments, and do human decision-making strategies resemble this optimum? We probe these questions with a task in which subjects report the orientation of ambiguous visual stimuli that were drawn from three dynamically switching distributions, representing different environmental contexts. We derive predictions for an ideal Bayesian observer that leverages the statistical structure of the task to maximize decision accuracy and show that its decisions are biased by task context. The magnitude of this decision bias is not a fixed property of the sensory measurement but depends on the observer's belief about the current context. The model therefore predicts that decision bias will grow with the reliability of the context cue, the stability of the environment, and the number of trials since the last context switch. Analysis of human choice data validates all three predictions, providing evidence that the brain continuously updates probabilistic representations of the environment to best interpret an uncertain, ever-changing world.
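The dependence of bias on belief can be illustrated with a standard forward (hidden Markov) filter over the three contexts. This is a sketch under stated assumptions, not the authors' ideal observer: it assumes that on each trial the context switches with a fixed hazard rate to one of the other contexts chosen uniformly, and that a hypothetical per-trial cue names the true context with probability `reliability`. The function names are illustrative. The quantity computed is the posterior belief in the current context, which in such a model sets how strongly that context's prior pulls the orientation report.

```python
def predict(belief, hazard):
    """Prior for the next trial: stay with prob 1 - hazard, else switch uniformly to another context."""
    k = len(belief)
    return [(1 - hazard) * b + hazard * (1 - b) / (k - 1) for b in belief]


def update(prior, cue, reliability):
    """Bayes' rule for a cue that names the true context with prob `reliability` (assumed cue model)."""
    k = len(prior)
    likelihood = [reliability if c == cue else (1 - reliability) / (k - 1) for c in range(k)]
    unnormalized = [p * l for p, l in zip(prior, likelihood)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def belief_after(n_trials, hazard, reliability, k=3):
    """Belief in context 0 after n consistent cues, starting from maximal uncertainty."""
    belief = [1.0 / k] * k
    for _ in range(n_trials):
        belief = update(predict(belief, hazard), cue=0, reliability=reliability)
    return belief[0]


for n in (1, 2, 5, 10):
    print(n, round(belief_after(n, hazard=0.1, reliability=0.7), 3))
```

In this toy model, a lower hazard (a more stable environment), a higher cue reliability, and more trials since a switch each raise the belief, which parallels the three predictions stated above.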

Many animals rely on a representation of head direction for flexible, goal-directed navigation. In insects, a compass-like head direction representation is maintained in a conserved brain region called the central complex. This head direction representation is updated by self-motion information and by tethering to sensory cues in the surroundings through a plasticity mechanism. However, under natural settings, some of these sensory cues may temporarily disappear—for example, when clouds hide the sun—and prominent landmarks at different distances from the insect may move across the animal's field of view during translation, creating potential conflicts for a neural compass. We used two-photon calcium imaging in head-fixed Drosophila behaving in virtual reality to monitor the fly's compass during navigation in immersive naturalistic environments with approachable local landmarks. We found that the fly's compass remains stable even in these settings by tethering to available global cues, likely preserving the animal's ability to perform compass-driven behaviors such as maintaining a constant heading.

Flexible behaviors over long timescales are thought to engage recurrent neural networks in deep brain regions, which are experimentally challenging to study. In insects, recurrent circuit dynamics in a brain region called the central complex (CX) enable directed locomotion, sleep, and context- and experience-dependent spatial navigation. We describe the first complete electron-microscopy-based connectome of the CX, including all its neurons and circuits at synaptic resolution. We identified new CX neuron types, novel sensory and motor pathways, and network motifs that likely enable the CX to extract the fly's head direction, maintain it with attractor dynamics, and combine it with other sensorimotor information to perform vector-based navigational computations. We also identified numerous pathways that may facilitate the selection of CX-driven behavioral patterns by context and internal state. The CX connectome provides a comprehensive blueprint necessary for a detailed understanding of network dynamics underlying sleep, flexible navigation, and state-dependent action selection.

Identifying coordinated activity within complex systems is essential to linking their structure and function. We study collective activity in networks of pulse-coupled oscillators that have variable network connectivity and integrate-and-fire dynamics. Starting from random initial conditions, we observe the emergence of three broad classes of behaviors that differ in their collective spiking statistics. In the first class ("temporally-irregular"), all nodes have variable inter-spike intervals, and the resulting firing patterns are irregular. In the second ("temporally-regular"), the network generates a coherent, repeating pattern of activity in which all nodes fire with the same constant inter-spike interval. In the third ("chimeric"), subgroups of coherently-firing nodes coexist with temporally-irregular nodes. Chimera states have previously been observed in networks of oscillators; here, we find that the notions of temporally-regular and chimeric states encompass a much richer set of dynamical patterns than has yet been described. We also find that degree heterogeneity and connection density have a strong effect on the resulting state: in binomial random networks, high degree variance and intermediate connection density tend to produce temporally-irregular dynamics, while low degree variance and high connection density tend to produce temporally-regular dynamics. Chimera states arise more frequently in networks with intermediate degree variance and either high or low connection densities. Finally, we demonstrate that a normalized compression distance, computed via the Lempel-Ziv complexity of nodal spike trains, can be used to distinguish these three classes of behavior even when the phase relationship between nodes is arbitrary.
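The abstract names the ingredients of its distance measure but not the exact formula, so the following sketch assumes the standard normalized compression distance, NCD(x, y) = (C(xy) - min(C(x), C(y))) / max(C(x), C(y)), with C taken to be the Lempel-Ziv (1976) phrase count computed by the Kaspar and Schuster scanning algorithm. Encoding each spike train as a binary string of time bins is also an assumption, and the function names are hypothetical.

```python
def lz76(seq):
    """Lempel-Ziv (1976) complexity: number of phrases in the exhaustive parsing of seq."""
    n = len(seq)
    if n < 2:
        return n
    i, k, l = 0, 1, 1   # i: candidate start in the prefix, k: match length, l: current phrase start
    c, k_max = 1, 1     # c: phrase count, k_max: longest match found for the current phrase
    while True:
        if seq[i + k - 1] == seq[l + k - 1]:
            k += 1
            if l + k > n:  # the match runs off the end: count the final phrase
                c += 1
                break
        else:
            k_max = max(k_max, k)
            i += 1
            if i == l:  # no earlier start extends further: close the phrase
                c += 1
                l += k_max
                if l + 1 > n:
                    break
                i, k, k_max = 0, 1, 1
            else:
                k = 1
    return c


def ncd(x, y):
    """Normalized compression distance with LZ76 complexity as the compressor (assumption)."""
    cx, cy, cxy = lz76(x), lz76(y), lz76(x + y)
    return (cxy - min(cx, cy)) / max(cx, cy)


regular = "01" * 20   # binned spike train firing every other bin
silent = "0" * 40     # a node that never fires
print(lz76("0001101001000101"), ncd(regular, regular), ncd(regular, silent))
```

Identical regular trains sit at distance zero, while trains with different structure are farther apart; the sketch does not reproduce the paper's handling of arbitrary phase relationships.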

The ability to adapt to changes in stimulus statistics is a hallmark of sensory systems. Here, we developed a theoretical framework that can account for the dynamics of adaptation from an information processing perspective. We use this framework to optimize and analyze adaptive sensory codes, and we show that codes optimized for stationary environments can suffer from prolonged periods of poor performance when the environment changes. To mitigate the adverse effects of these environmental changes, sensory systems must navigate tradeoffs between the ability to accurately encode incoming stimuli and the ability to rapidly detect and adapt to changes in the distribution of these stimuli. We derive families of codes that balance these objectives, and we demonstrate their close match to experimentally observed neural dynamics during mean and variance adaptation. Our results provide a unifying perspective on adaptation across a range of sensory systems, environments, and sensory tasks.
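A generic way to see the tradeoff between accurate encoding and rapid adaptation is a one-parameter variance estimator. This is not the authors' framework; it is an exponentially weighted moving average (EWMA) of squared, zero-mean Gaussian stimuli, chosen only because its two costs have closed forms. A larger weight `alpha` reaches a new variance in fewer trials, but in a stationary environment its estimate fluctuates more: an EWMA of i.i.d. inputs with variance s^2 has stationary variance alpha / (2 - alpha) * s^2, and for Gaussian x, Var(x^2) = 2 var^2. The function names and the 10% tolerance are assumptions.

```python
import math


def ewma_update(estimate, stimulus, alpha):
    """One step of an exponentially weighted estimate of stimulus variance (zero-mean stimuli)."""
    return (1 - alpha) * estimate + alpha * stimulus * stimulus


def steps_to_adapt(alpha, old_var, new_var, tol=0.1):
    """Trials until the estimate is within tol * new_var of the new variance, ignoring sampling noise."""
    estimate, steps = old_var, 0
    while abs(estimate - new_var) > tol * new_var:
        # feed a stimulus whose square equals the new variance (noise-free idealization)
        estimate = ewma_update(estimate, math.sqrt(new_var), alpha)
        steps += 1
    return steps


def steady_state_sd(alpha, var):
    """Std. dev. of the estimate in a stationary Gaussian environment with the given variance."""
    return math.sqrt(alpha / (2 - alpha) * 2 * var ** 2)


for alpha in (0.05, 0.5):
    print(f"alpha={alpha}: adapts in {steps_to_adapt(alpha, 1.0, 4.0)} trials, "
          f"steady-state sd {steady_state_sd(alpha, 1.0):.3f}")
```

No single `alpha` minimizes both costs, which is the kind of tension the abstract's families of codes are derived to balance.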

Hunger and thirst have distinct goals but control similar ingestive behaviors, and little is known about neural processes that are shared between these behavioral states. We identify glutamatergic neurons in the peri-locus coeruleus (periLC neurons) as a polysynaptic convergence node from separate energy-sensitive and hydration-sensitive cell populations. We develop methods for stable hindbrain calcium imaging in free-moving mice, which show that periLC neurons are tuned to ingestive behaviors and respond similarly to food or water consumption. PeriLC neurons are scalably inhibited by palatability and homeostatic need during consumption. Inhibition of periLC neurons is rewarding and increases consumption by enhancing palatability and prolonging ingestion duration. These properties comprise a double-negative feedback relationship that sustains food or water consumption without affecting food- or water-seeking. PeriLC neurons are a hub between hunger and thirst that specifically controls motivation for food and water ingestion, which is a factor that contributes to hedonic overeating and obesity.

Previously (Hermundstad et al., 2014), we showed that when sampling is limiting, the efficient coding principle leads to a 'variance is salience' hypothesis, and that this hypothesis accounts for visual sensitivity to binary image statistics. Here, using extensive new psychophysical data and image analysis, we show that this hypothesis accounts for visual sensitivity to a large set of grayscale image statistics at a striking level of detail, and also identify the limits of the prediction. We define a 66-dimensional space of local grayscale light-intensity correlations, and measure the relevance of each direction to natural scenes. The 'variance is salience' hypothesis predicts that two-point correlations are most salient, and predicts their relative salience. We tested these predictions in a texture-segregation task using unnatural, synthetic textures. As predicted, correlations beyond second order are not salient, and predicted thresholds for over 300 second-order correlations match psychophysical thresholds closely (median fractional error < 0.13).
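To make "two-point correlation" and "variance is salience" concrete, here is a toy sketch in a binary simplification: pixels are coded as +1 or -1 (an assumption for this sketch; the study above works with grayscale statistics), a two-point statistic is the mean product of pixel pairs at a fixed offset, and the salience proxy is the variance of that statistic across a set of image patches. The patches and function names are hypothetical; the actual analysis measured variation across natural-scene patches.

```python
from statistics import pvariance


def two_point(image, dy, dx):
    """Mean product of +/-1 pixel values separated by (dy, dx), with dy, dx >= 0."""
    rows, cols = len(image), len(image[0])
    products = [image[r][c] * image[r + dy][c + dx]
                for r in range(rows - dy) for c in range(cols - dx)]
    return sum(products) / len(products)


def salience_proxy(patches, dy, dx):
    """Variance of a two-point statistic across patches ('variance is salience' proxy)."""
    return pvariance([two_point(p, dy, dx) for p in patches])


checkerboard = [[1 if (r + c) % 2 == 0 else -1 for c in range(8)] for r in range(8)]
stripes = [[1 if c % 2 == 0 else -1 for c in range(8)] for r in range(8)]  # vertical stripes

patches = [checkerboard, stripes]
print("horizontal:", salience_proxy(patches, 0, 1), "vertical:", salience_proxy(patches, 1, 0))
```

In this two-patch toy set, the vertical pair statistic varies across patches while the horizontal one does not, so under the hypothesis only the former would be predicted to be salient.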

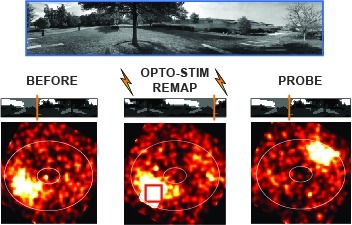

Many animals rely on an internal heading representation when navigating in varied environments. How this representation is linked to the sensory cues that define different surroundings is unclear. In the fly brain, heading is represented by 'compass' neurons that innervate a ring-shaped structure known as the ellipsoid body. Each compass neuron receives inputs from 'ring' neurons that are selective for particular visual features; this combination provides an ideal substrate for the extraction of directional information from a visual scene. Here we combine two-photon calcium imaging and optogenetics in tethered flying flies with circuit modelling, and show how the correlated activity of compass and visual neurons drives plasticity, which flexibly transforms two-dimensional visual cues into a stable heading representation. We also describe how this plasticity enables the fly to convert a partial heading representation, established from orienting within part of a novel setting, into a complete heading representation. Our results provide mechanistic insight into the memory-related computations that are essential for flexible navigation in varied surroundings.