Stringer Lab

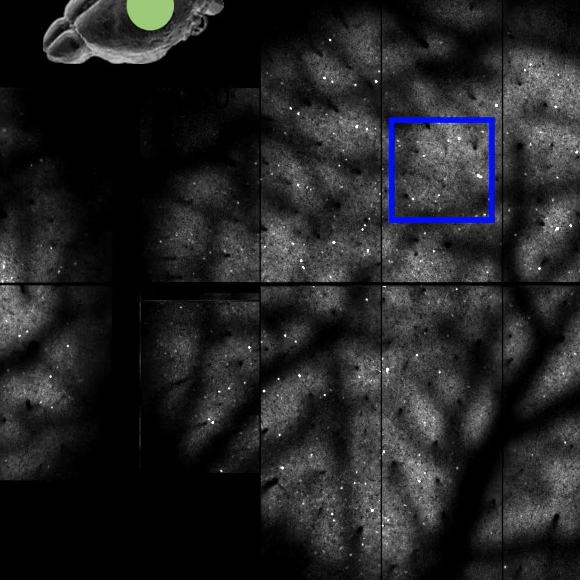

Our lab combines machine learning / AI techniques and large-scale imaging to investigate plasticity rules and sensory representations in cortical circuits. Lab page: mouseland.github.io

Current Research

We are constantly bombarded with sensory information, and our brains must quickly parse it to identify the sensory features relevant for deciding our motor actions. Picking up a coffee mug or catching a ball, for example, requires complex visual processing to guide the movement. To determine how neurons work together to perform such tasks, we acquire large-scale recordings of 50,000+ neurons and analyze them using machine learning.
