Filter

Associated Lab

- Ahrens Lab (1)

- Aso Lab (3)

- Branson Lab (selected)

- Card Lab (3)

- Cardona Lab (1)

- Dickson Lab (1)

- Fetter Lab (1)

- Freeman Lab (2)

- Harris Lab (1)

- Heberlein Lab (1)

- Karpova Lab (1)

- Keller Lab (3)

- Otopalik Lab (1)

- Reiser Lab (3)

- Rubin Lab (7)

- Simpson Lab (1)

- Svoboda Lab (1)

- Tervo Lab (1)

- Truman Lab (1)

- Turaga Lab (4)

- Zlatic Lab (1)

Associated Project Team

Associated Support Team

- Anatomy and Histology (1)

- Fly Facility (1)

- Janelia Experimental Technology (1)

- Project Technical Resources (4)

- Quantitative Genomics (1)

- Scientific Computing Software (3)

- Scientific Computing Systems (1)

Publication Date

45 Janelia Publications

Showing 41-45 of 45 results

An approaching predator and self-motion toward an object can generate similar looming patterns on the retina, but these situations demand different rapid responses. How central circuits flexibly process visual cues to activate appropriate, fast motor pathways remains unclear. Here we identify two descending neuron (DN) types that control landing and contribute to visuomotor flexibility in Drosophila. For each, silencing impairs visually evoked landing, activation drives landing, and spike rate determines leg extension amplitude. Critically, visual responses of both DNs are severely attenuated during non-flight periods, effectively decoupling visual stimuli from the landing motor pathway when landing is inappropriate. The flight-dependence mechanism differs between DN types. Octopamine exposure mimics flight effects in one, whereas the other probably receives neuronal feedback from flight motor circuits. Thus, this sensorimotor flexibility arises from distinct mechanisms for gating action-specific descending pathways, such that sensory and motor networks are coupled or decoupled according to the behavioral state.

Kernel regression and classification (also referred to as weighted ε-NN methods in machine learning) are appealing for their simplicity and are therefore ubiquitous in data analysis. However, practical implementations of kernel regression or classification typically quantize or sub-sample the data to improve time efficiency, often at the cost of prediction quality. While such tradeoffs are necessary in practice, their statistical implications are generally not well understood; hence practical implementations come with few performance guarantees. In particular, it is unclear whether it is possible to maintain the statistical accuracy of kernel prediction, which is crucial in some applications, while improving prediction time. The present work provides guiding principles for combining kernel prediction with data quantization so as to guarantee good tradeoffs between prediction time and accuracy, and in particular so as to approximately maintain the good accuracy of vanilla kernel prediction. Furthermore, our tradeoff guarantees are worked out explicitly in terms of a tuning parameter that acts as a knob favoring either time or accuracy depending on practical needs. At one end of the knob, prediction time is of the same order as that of single-nearest-neighbor prediction (which is statistically inconsistent) while consistency is maintained; at the other end, the prediction risk is nearly minimax-optimal (in terms of the original data size) while time complexity is still reduced. The analysis thus reveals the interaction between the data-quantization approach and the kernel prediction method and, most importantly, gives the practitioner explicit control of the tradeoff rather than fixing it in advance or leaving it opaque. The theoretical results are validated on data from a range of real-world application domains; in particular, we demonstrate that the theoretical knob performs as expected.
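The time/accuracy tradeoff described above can be illustrated with a small sketch. It is not the quantization scheme or the guarantees analyzed in the paper: it assumes a simple grid quantization (`quantize`, a hypothetical helper) and a box kernel, i.e. weighted ε-NN regression (`kernel_predict`, also hypothetical). Each occupied grid cell is replaced by one center carrying its point count and mean label, so each query scans fewer points; the cell side plays the role of a rough time-versus-accuracy knob.

```python
import numpy as np


def quantize(X, y, cell):
    """Grid-quantize X: one center per occupied cell of side `cell`.

    Returns the mean location, mean label and point count of each cell.
    """
    keys = np.floor(X / cell).astype(np.int64)
    _, inv = np.unique(keys, axis=0, return_inverse=True)
    inv = inv.ravel()  # inverse shape differs across NumPy versions; flatten it
    counts = np.bincount(inv)
    centers = np.zeros((counts.size, X.shape[1]))
    np.add.at(centers, inv, X)  # sum the points that fall in each cell
    centers /= counts[:, None]
    y_mean = np.bincount(inv, weights=y) / counts
    return centers, y_mean, counts


def kernel_predict(Q, centers, y_mean, counts, h):
    """Box-kernel (weighted eps-NN) regression over count-weighted centers."""
    d = np.linalg.norm(Q[:, None, :] - centers[None, :, :], axis=2)
    w = (d <= h) * counts[None, :]  # each center votes with its point count
    num = w @ y_mean
    den = w.sum(axis=1)
    # If no center lies within h, fall back to the nearest center's label.
    nearest = y_mean[np.argmin(d, axis=1)]
    return np.where(den > 0, num / np.maximum(den, 1e-12), nearest)


# Hypothetical usage: a larger `cell` leaves fewer centers (faster queries)
# but gives a coarser approximation of the full-data kernel estimate.
rng = np.random.default_rng(0)
X = rng.uniform(0, 1, size=(2000, 2))
y = np.sin(4 * X[:, 0]) + 0.1 * rng.standard_normal(2000)
Q = rng.uniform(0, 1, size=(5, 2))
for cell in (0.01, 0.05, 0.1):
    C, ym, cnt = quantize(X, y, cell)
    print(cell, len(C), kernel_predict(Q, C, ym, cnt, h=0.1))
```

When the cell side is much smaller than the bandwidth `h`, every point keeps its own center and the prediction matches vanilla kernel regression on the raw data; as the cell side grows toward `h`, fewer centers remain and the estimate becomes coarser.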

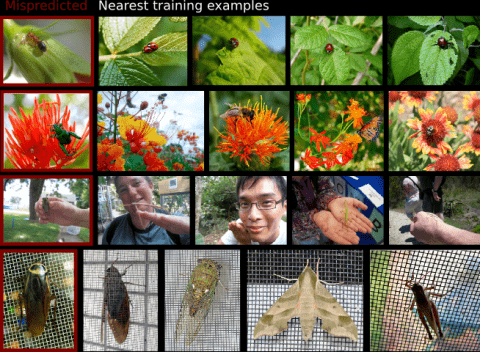

Modern supervised learning algorithms can learn very accurate and complex discriminating functions. But when these classifiers fail, this complexity can also be a drawback because there is no easy, intuitive way to diagnose why they are failing and remedy the problem. This important question has received little attention. To address this problem, we propose a novel method to analyze and understand a classifier's errors. Our method centers around a measure of how much influence a training example has on the classifier's prediction for a test example. To understand why a classifier is mispredicting the label of a given test example, the user can find and review the most influential training examples that caused this misprediction, allowing them to focus their attention on relevant areas of the data space. This will aid the user in determining if and how the training data is inconsistently labeled or lacking in diversity, or if the feature representation is insufficient. As computing the influence of each training example is computationally impractical, we propose a novel distance metric to approximate influence for boosting classifiers that is fast enough to be used interactively. We also show several novel use paradigms of our distance metric. Through experiments, we show that it can be used to find incorrectly or inconsistently labeled training examples, to find specific areas of the data space that need more training data, and to gain insight into which features are missing from the current representation. Code is available at https://github.com/kristinbranson/InfluentialNeighbors.
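To make the idea of ranking training examples by a fast influence proxy concrete, here is a deliberately simplified sketch for an ensemble of decision stumps. The distance used here (an α-weighted count of stumps on which two examples fall on different sides of the threshold) is an assumption chosen for illustration; it is not the distance metric proposed in the paper, whose code is in the linked InfluentialNeighbors repository. All function names are hypothetical.

```python
import numpy as np


def stump_outputs(X, stumps):
    """Evaluate decision stumps (feature, threshold, alpha) as +1/-1 votes."""
    feats = np.array([f for f, _, _ in stumps])
    thr = np.array([t for _, t, _ in stumps])
    return np.where(X[:, feats] > thr, 1, -1)  # shape (n_examples, n_stumps)


def boosting_distance(X_train, x_test, stumps):
    """Alpha-weighted count of stumps on which a training example and the
    test example disagree (0 means the ensemble treats them identically)."""
    alphas = np.array([abs(a) for _, _, a in stumps])
    H_train = stump_outputs(X_train, stumps)
    h_test = stump_outputs(x_test[None, :], stumps)[0]
    return (H_train != h_test) @ alphas


def top_neighbors(X_train, x_test, stumps, k=3):
    """Indices of the k training examples closest under boosting_distance."""
    d = boosting_distance(X_train, x_test, stumps)
    return np.argsort(d, kind="stable")[:k], d


# Hypothetical usage: review the labels of the closest training examples to
# judge whether a misprediction traces back to inconsistent labels.
stumps = [(0, 0.5, 1.0), (1, 0.5, 0.5)]
X_train = np.array([[0.9, 0.9], [0.1, 0.9], [0.9, 0.1], [0.1, 0.1]])
idx, d = top_neighbors(X_train, np.array([0.8, 0.7]), stumps, k=2)
print(idx, d)  # idx -> [0, 2], d -> [0.0, 1.0, 0.5, 1.5]
```

Because the distance only compares precomputed stump outputs, ranking all training examples costs one pass over an (examples × stumps) table, which is the kind of speed that interactive use requires.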

The body of an animal determines how the nervous system produces behavior. Therefore, detailed modeling of the neural control of sensorimotor behavior requires a detailed model of the body. Here we contribute an anatomically detailed biomechanical whole-body model of the fruit fly Drosophila melanogaster in the MuJoCo physics engine. Our model is general-purpose, enabling the simulation of diverse fly behaviors, both on land and in the air. We demonstrate the generality of our model by simulating realistic locomotion, both flight and walking. To support these behaviors, we have extended MuJoCo with phenomenological models of fluid forces and adhesion forces. Through data-driven end-to-end reinforcement learning, we demonstrate that these advances enable the training of neural network controllers capable of realistic locomotion along complex trajectories based on high-level steering control signals. With a visually guided flight task, we demonstrate a neural controller that can use the vision sensors of the body model to control and steer flight. Our project is an open-source platform for modeling neural control of sensorimotor behavior in an embodied context.

Competing Interest Statement: The authors have declared no competing interest.

Understanding how the brain works in tight concert with the rest of the central nervous system (CNS) hinges upon knowledge of coordinated activity patterns across the whole CNS. We present a method for measuring activity in an entire, non-transparent CNS with high spatiotemporal resolution. We combine a light-sheet microscope capable of simultaneous multi-view imaging at volumetric speeds 25-fold faster than the state-of-the-art, a whole-CNS imaging assay for the isolated Drosophila larval CNS and a computational framework for analysing multi-view, whole-CNS calcium imaging data. We image both brain and ventral nerve cord, covering the entire CNS at 2 or 5 Hz with two- or one-photon excitation, respectively. By mapping network activity during fictive behaviours and quantitatively comparing high-resolution whole-CNS activity maps across individuals, we predict functional connections between CNS regions and reveal neurons in the brain that identify type and temporal state of motor programs executed in the ventral nerve cord.
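The idea of predicting functional connections from whole-CNS activity maps can be illustrated with a generic sketch. It is not the computational framework from the paper: the percentile ΔF/F baseline, the lagged Pearson correlation, the threshold and all function names are assumptions. Lags are counted in imaged volumes, so the volume rate (2 or 5 Hz in the text) converts them to seconds.

```python
import numpy as np


def delta_f_over_f(F, baseline_pct=10.0):
    """Normalize raw traces F (regions x frames) by a per-region percentile baseline."""
    F0 = np.percentile(F, baseline_pct, axis=1, keepdims=True)
    return (F - F0) / F0


def lagged_correlation(a, b, max_lag):
    """Best Pearson r between a and b over integer frame lags.

    A positive lag means b trails a by `lag` frames.
    """
    best_lag, best_r = 0, -np.inf
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            x, y = a[: len(a) - lag], b[lag:]
        else:
            x, y = a[-lag:], b[: len(b) + lag]
        r = np.corrcoef(x, y)[0, 1]
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r


def functional_links(F, volume_rate_hz, max_lag, threshold=0.5):
    """List (leader, follower, delay_s, r) for region pairs whose dF/F traces
    correlate above `threshold` with the follower lagging the leader."""
    dff = delta_f_over_f(F)
    links = []
    for i in range(dff.shape[0]):
        for j in range(dff.shape[0]):
            if i == j:
                continue
            lag, r = lagged_correlation(dff[i], dff[j], max_lag)
            if r >= threshold and lag > 0:
                # Convert frames to seconds: at 2 Hz one volume is 0.5 s.
                links.append((i, j, lag / volume_rate_hz, r))
    return links


# Hypothetical usage with synthetic traces for three regions.
rng = np.random.default_rng(0)
drive = rng.standard_normal(200)
F = np.vstack([
    10 + drive,                     # region 0
    10 + np.roll(drive, 4),         # region 1 lags region 0 by 4 volumes
    10 + rng.standard_normal(200),  # region 2 is unrelated
])
print(functional_links(F, volume_rate_hz=2.0, max_lag=6))
```

Correlation, even with a lag, does not establish a direct anatomical connection; in this sketch a link only flags a pair of regions whose activity is consistently offset in time.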