Select Publications

Assigning behavioral functions to neural structures has long been a central goal in neuroscience and is a necessary first step toward a circuit-level understanding of how the brain generates behavior. Here, we map the neural substrates of locomotion and social behaviors for Drosophila melanogaster using automated machine-vision and machine-learning techniques. From videos of 400,000 flies, we quantified the behavioral effects of activating 2,204 genetically targeted populations of neurons. We combined a novel quantification of anatomy with our behavioral analysis to create brain-behavior correlation maps, which are shared as browsable web pages and interactive software. Based on these maps, we generated hypotheses of regions of the brain causally related to sensory processing, locomotor control, courtship, aggression, and sleep. Our maps directly specify genetic tools to target these regions, which we used to identify a small population of neurons with a role in the control of walking.

• We developed machine-vision methods to broadly and precisely quantify fly behavior
• We measured effects of activating 2,204 genetically targeted neuronal populations
• We created whole-brain maps of neural substrates of locomotor and social behaviors
• We created resources for exploring our results and enabling further investigation

Machine-vision analyses of large behavior and neuroanatomy data reveal whole-brain maps of regions associated with numerous complex behaviors.

We present a machine learning–based system for automatically computing interpretable, quantitative measures of animal behavior. Through our interactive system, users encode their intuition about behavior by annotating a small set of video frames. These manual labels are converted into classifiers that can automatically annotate behaviors in screen-scale data sets. Our general-purpose system can create a variety of accurate individual and social behavior classifiers for different organisms, including mice and adult and larval Drosophila.

We propose a framework for detecting action patterns from motion sequences and modeling the sensory-motor relationship of animals, using a generative recurrent neural network. The network has a discriminative part (classifying actions) and a generative part (predicting motion), whose recurrent cells are laterally connected, allowing higher levels of the network to represent high-level phenomena. We test our framework on two types of data, fruit fly behavior and online handwriting. Our results show that 1) taking advantage of unlabeled sequences, by predicting future motion, significantly improves action detection performance when training labels are scarce, 2) the network learns to represent high-level phenomena such as writer identity and fly gender, without supervision, and 3) simulated motion trajectories, generated by treating motion prediction as input to the network, look realistic and may be used to qualitatively evaluate whether the model has learnt generative control rules.

In this work, we address the problem of precisely localizing key frames of an action, for example, the precise time that a pitcher releases a baseball, or the precise time that a crowd begins to applaud. Key frame localization is a largely overlooked and important action-recognition problem, for example in the field of neuroscience, in which we would like to understand the neural activity that produces the start of a bout of an action. To address this problem, we introduce a novel structured loss function that properly weights the types of errors that matter in such applications: it more heavily penalizes extra and missed action start detections over small misalignments. Our structured loss is based on the best matching between predicted and labeled action starts. We train recurrent neural networks (RNNs) to minimize differentiable approximations of this loss. To evaluate these methods, we introduce the Mouse Reach Dataset, a large, annotated video dataset of mice performing a sequence of actions. The dataset was collected and labeled by experts for the purpose of neuroscience research. On this dataset, we demonstrate that our method outperforms related approaches and baseline methods using an unstructured loss.
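
The idea of scoring predictions by the best matching between predicted and labeled action starts can be illustrated with a small sketch. This is not the paper's differentiable loss: it is a plain, non-differentiable scoring function, and the name `match_action_starts`, the tolerance `tol`, and the unit costs for extra and missed detections are all assumptions chosen for illustration. It pairs predicted and labeled start frames in temporal order with dynamic programming, charging a small cost for misalignment within the tolerance and a larger cost for each unmatched start.

```python
def match_action_starts(pred, label, tol=5.0, extra_cost=1.0, miss_cost=1.0):
    """Order-preserving minimum-cost matching of start frames (illustrative).

    A matched pair costs |pred - label| / tol (at most 1.0); pairs further
    apart than `tol` frames cannot be matched. Each unmatched prediction
    costs `extra_cost` and each unmatched label costs `miss_cost`.
    Returns (total_cost, matched_pairs, extra_predictions, missed_labels).
    """
    pred, label = sorted(pred), sorted(label)
    n, m = len(pred), len(label)
    inf = float("inf")
    # cost[i][j]: best cost for the first i predictions and first j labels.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    back = [[None] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(n + 1):
        for j in range(m + 1):
            # Leave prediction i unmatched (an extra detection).
            if i > 0 and cost[i - 1][j] + extra_cost < cost[i][j]:
                cost[i][j] = cost[i - 1][j] + extra_cost
                back[i][j] = "extra"
            # Leave label j unmatched (a missed detection).
            if j > 0 and cost[i][j - 1] + miss_cost < cost[i][j]:
                cost[i][j] = cost[i][j - 1] + miss_cost
                back[i][j] = "miss"
            # Match prediction i with label j if they are close enough.
            if i > 0 and j > 0:
                d = abs(pred[i - 1] - label[j - 1])
                if d <= tol and cost[i - 1][j - 1] + d / tol < cost[i][j]:
                    cost[i][j] = cost[i - 1][j - 1] + d / tol
                    back[i][j] = "match"
    # Walk the back-pointers to recover which starts were matched.
    pairs, extra, missed = [], [], []
    i, j = n, m
    while i > 0 or j > 0:
        step = back[i][j]
        if step == "match":
            pairs.append((pred[i - 1], label[j - 1]))
            i, j = i - 1, j - 1
        elif step == "extra":
            extra.append(pred[i - 1])
            i -= 1
        else:
            missed.append(label[j - 1])
            j -= 1
    return cost[n][m], pairs[::-1], extra[::-1], missed[::-1]


if __name__ == "__main__":
    # Two predictions land near labeled starts; the third is spurious.
    print(match_action_starts([10, 52, 90], [12, 50], tol=5.0))
```

With these assumed costs, a misalignment never costs more than a single extra or missed detection, which mirrors the weighting described in the abstract.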

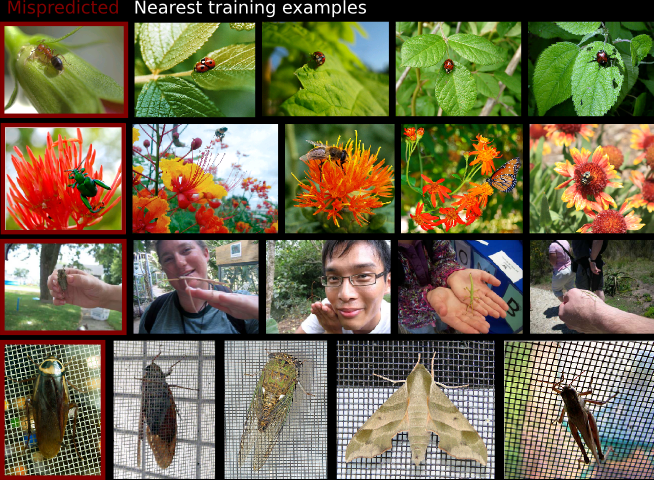

Modern supervised learning algorithms can learn very accurate and complex discriminating functions. But when these classifiers fail, this complexity can also be a drawback because there is no easy, intuitive way to diagnose why they are failing and remedy the problem. This important question has received little attention. To address this problem, we propose a novel method to analyze and understand a classifier's errors. Our method centers around a measure of how much influence a training example has on the classifier's prediction for a test example. To understand why a classifier is mispredicting the label of a given test example, the user can find and review the most influential training examples that caused this misprediction, allowing them to focus their attention on relevant areas of the data space. This will aid the user in determining if and how the training data is inconsistently labeled or lacking in diversity, or if the feature representation is insufficient. As computing the influence of each training example is computationally impractical, we propose a novel distance metric to approximate influence for boosting classifiers that is fast enough to be used interactively. We also show several novel use paradigms of our distance metric. Through experiments, we show that it can be used to find incorrectly or inconsistently labeled training examples, to find specific areas of the data space that need more training data, and to gain insight into which features are missing from the current representation.

Code is available at https://github.com/kristinbranson/InfluentialNeighbors.

Metric learning seeks a transformation of the feature space that enhances prediction quality for a given task. In this work we provide PAC-style sample complexity rates for supervised metric learning. We give matching lower and upper bounds showing that sample complexity scales with the representation dimension when no assumptions are made about the underlying data distribution. In addition, by leveraging the structure of the data distribution, we provide rates fine-tuned to a specific notion of the intrinsic complexity of a given dataset, allowing us to relax the dependence on representation dimension. We show both theoretically and empirically that augmenting the metric learning optimization criterion with a simple norm-based regularization is important and can help adapt to a dataset’s intrinsic complexity, yielding better generalization, thus partly explaining the empirical success of similar regularizations reported in previous works.

In this work, we address the problem of pose detection and tracking of multiple individuals for the study of behaviour in insects and animals. Using a Deep Neural Network architecture, precise detection and association of the body parts can be performed. The models are learned based on user-annotated training videos, which gives flexibility to the approach. This is illustrated on two different animals, honeybees and mice, where very good performance in part recognition and association is observed despite the presence of multiple interacting individuals.

We present a camera-based method for automatically quantifying the individual and social behaviors of fruit flies, Drosophila melanogaster, interacting in a planar arena. Our system includes machine-vision algorithms that accurately track many individuals without swapping identities and classification algorithms that detect behaviors. The data may be represented as an ethogram that plots the time course of behaviors exhibited by each fly or as a vector that concisely captures the statistical properties of all behaviors displayed in a given period. We found that behavioral differences between individuals were consistent over time and were sufficient to accurately predict gender and genotype. In addition, we found that the relative positions of flies during social interactions vary according to gender, genotype and social environment. We expect that our software, which permits high-throughput screening, will complement existing molecular methods available in Drosophila, facilitating new investigations into the genetic and cellular basis of behavior.
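
The two representations mentioned above, an ethogram and a summary vector, can be sketched from per-frame behavior labels. This is a minimal illustration, not the published software: the function names, the behavior names, and the choice of fraction of time as the only statistic are assumptions.

```python
from collections import Counter
from itertools import groupby


def frames_to_bouts(labels):
    """Collapse per-frame labels into (behavior, start, end) bouts.

    `end` is exclusive, so a bout covers frames start..end-1. The list of
    bouts is the data one would plot as an ethogram row for one animal.
    """
    bouts = []
    start = 0
    for behavior, run in groupby(labels):
        length = sum(1 for _ in run)
        bouts.append((behavior, start, start + length))
        start += length
    return bouts


def behavior_vector(labels, behaviors):
    """Fraction of frames spent in each behavior, in the order given."""
    counts = Counter(labels)
    total = len(labels)
    return [counts[b] / total if total else 0.0 for b in behaviors]


if __name__ == "__main__":
    # Hypothetical labels for six consecutive frames of one fly.
    frames = ["walk", "walk", "stop", "chase", "chase", "chase"]
    print(frames_to_bouts(frames))
    print(behavior_vector(frames, ["walk", "stop", "chase"]))
```

A fuller summary vector would likely add statistics such as bout counts or mean bout duration; fraction of time is used here only to keep the sketch short.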

In this review, we discuss the emerging field of computational behavioral analysis: the use of modern methods from computer science and engineering to quantitatively measure animal behavior. We discuss aspects of experiment design important to both obtaining biologically relevant behavioral data and enabling the use of machine vision and learning techniques for automation. These two goals are often in conflict. Restraining or restricting the environment of the animal can simplify automatic behavior quantification, but it can also degrade the quality or alter important aspects of behavior. To enable biologists to design experiments to obtain better behavioral measurements, and computer scientists to pinpoint fruitful directions for algorithm improvement, we review known effects of artificial manipulation of the animal on behavior. We also review machine vision and learning techniques for tracking, feature extraction, automated behavior classification, and automated behavior discovery, the assumptions they make, and the types of data they work best with. Expected final online publication date for the Annual Review of Neuroscience Volume 39 is July 08, 2016. Please see http://www.annualreviews.org/catalog/pubdates.aspx for revised estimates.

Recent developments in machine vision methods for automatic, quantitative analysis of social behavior have immensely improved both the scale and level of resolution with which we can dissect interactions between members of the same species. In this paper, we review these methods, with a particular focus on how biologists can apply them to their own work. We discuss several components of machine vision-based analyses: methods to record high-quality video for automated analyses, video-based tracking algorithms for estimating the positions of interacting animals, and machine learning methods for recognizing patterns of interactions. These methods are extremely general in their applicability, and we review a subset of successful applications of them to biological questions in several model systems with very different types of social behaviors.

The mouse embryo has long been central to the study of mammalian development; however, elucidating the cell behaviors governing gastrulation and the formation of tissues and organs remains a fundamental challenge. A major obstacle is the lack of live imaging and image analysis technologies capable of systematically following cellular dynamics across the developing embryo. We developed a light-sheet microscope that adapts itself to the dramatic changes in size, shape, and optical properties of the post-implantation mouse embryo and captures its development from gastrulation to early organogenesis at the cellular level. We furthermore developed a computational framework for reconstructing long-term cell tracks, cell divisions, dynamic fate maps, and maps of tissue morphogenesis across the entire embryo. By jointly analyzing cellular dynamics in multiple embryos registered in space and time, we built a dynamic atlas of post-implantation mouse development that, together with our microscopy and computational methods, is provided as a resource.

Aggressive social interactions are used to compete for limited resources and are regulated by complex sensory cues and the organism's internal state. While both sexes exhibit aggression, its neuronal underpinnings are understudied in females. Here, we identify a population of sexually dimorphic aIPg neurons in the adult central brain whose optogenetic activation increased, and genetic inactivation reduced, female aggression. Analysis of GAL4 lines identified in an unbiased screen for increased female chasing behavior revealed the involvement of another sexually dimorphic neuron, pC1d, and implicated aIPg and pC1d neurons as core nodes regulating female aggression. Connectomic analysis demonstrated that aIPg neurons and pC1d are interconnected and suggested that aIPg neurons may exert part of their effect by gating the flow of visual information to descending neurons. Our work reveals important regulatory components of the neuronal circuitry that underlies female aggressive social interactions and provides tools for their manipulation.

The motor cortex controls skilled arm movement by sending temporal patterns of activity to lower motor centres. Local cortical dynamics are thought to shape these patterns throughout movement execution. External inputs have been implicated in setting the initial state of the motor cortex, but they may also have a pattern-generating role. Here we dissect the contribution of local dynamics and inputs to cortical pattern generation during a prehension task in mice. Perturbing cortex to an aberrant state prevented movement initiation, but after the perturbation was released, cortex either bypassed the normal initial state and immediately generated the pattern that controls reaching or failed to generate this pattern. The difference in these two outcomes was probably a result of external inputs. We directly investigated the role of inputs by inactivating the thalamus; this perturbed cortical activity and disrupted limb kinematics at any stage of the movement. Activation of thalamocortical axon terminals at different frequencies disrupted cortical activity and arm movement in a graded manner. Simultaneous recordings revealed that both thalamic activity and the current state of cortex predicted changes in cortical activity. Thus, the pattern generator for dexterous arm movement is distributed across multiple, strongly interacting brain regions.

The comprehensive reconstruction of cell lineages in complex multicellular organisms is a central goal of developmental biology. We present an open-source computational framework for the segmentation and tracking of cell nuclei with high accuracy and speed. We demonstrate its (i) generality by reconstructing cell lineages in four-dimensional, terabyte-sized image data sets of fruit fly, zebrafish and mouse embryos acquired with three types of fluorescence microscopes, (ii) scalability by analyzing advanced stages of development with up to 20,000 cells per time point at 26,000 cells min⁻¹ on a single computer workstation and (iii) ease of use by adjusting only two parameters across all data sets and providing visualization and editing tools for efficient data curation. Our approach achieves on average 97.0% linkage accuracy across all species and imaging modalities. Using our system, we performed the first cell lineage reconstruction of early Drosophila melanogaster nervous system development, revealing neuroblast dynamics throughout an entire embryo.

Mammalian cerebral cortex is accepted as being critical for voluntary motor control, but what functions depend on cortex is still unclear. Here we used rapid, reversible optogenetic inhibition to test the role of cortex during a head-fixed task in which mice reach, grab, and eat a food pellet. Sudden cortical inhibition blocked initiation or froze execution of this skilled prehension behavior, but left untrained forelimb movements unaffected. Unexpectedly, kinematically normal prehension occurred immediately after cortical inhibition even during rest periods lacking cue and pellet. This 'rebound' prehension was only evoked in trained and food-deprived animals, suggesting that a motivation-gated motor engram sufficient to evoke prehension is activated at inhibition's end. These results demonstrate the necessity and sufficiency of cortical activity for enacting a learned skill.

Animals discriminate stimuli, learn their predictive value and use this knowledge to modify their behavior. In Drosophila, the mushroom body (MB) plays a key role in these processes. Sensory stimuli are sparsely represented by ∼2000 Kenyon cells, which converge onto 34 output neurons (MBONs) of 21 types. We studied the role of MBONs in several associative learning tasks and in sleep regulation, revealing the extent to which information flow is segregated into distinct channels and suggesting possible roles for the multi-layered MBON network. We also show that optogenetic activation of MBONs can, depending on cell type, induce repulsion or attraction in flies. The behavioral effects of MBON perturbation are combinatorial, suggesting that the MBON ensemble collectively represents valence. We propose that local, stimulus-specific dopaminergic modulation selectively alters the balance within the MBON network for those stimuli. Our results suggest that valence encoded by the MBON ensemble biases memory-based action selection.

Behavioral choices that ignore prior experience promote exploration and unpredictability but are seemingly at odds with the brain's tendency to use experience to optimize behavioral choice. Indeed, when faced with virtual competitors, primates resort to strategic counterprediction rather than to stochastic choice. Here, we show that rats also use history- and model-based strategies when faced with similar competitors but can switch to a "stochastic" mode when challenged with a competitor that they cannot defeat by counterprediction. In this mode, outcomes associated with an animal's actions are ignored, and normal engagement of anterior cingulate cortex (ACC) is suppressed. Using circuit perturbations in transgenic rats, we demonstrate that switching between strategic and stochastic behavioral modes is controlled by locus coeruleus input into ACC. Our findings suggest that, under conditions of uncertainty about environmental rules, changes in noradrenergic input alter ACC output and prevent erroneous beliefs from guiding decisions, thus enabling behavioral variation.

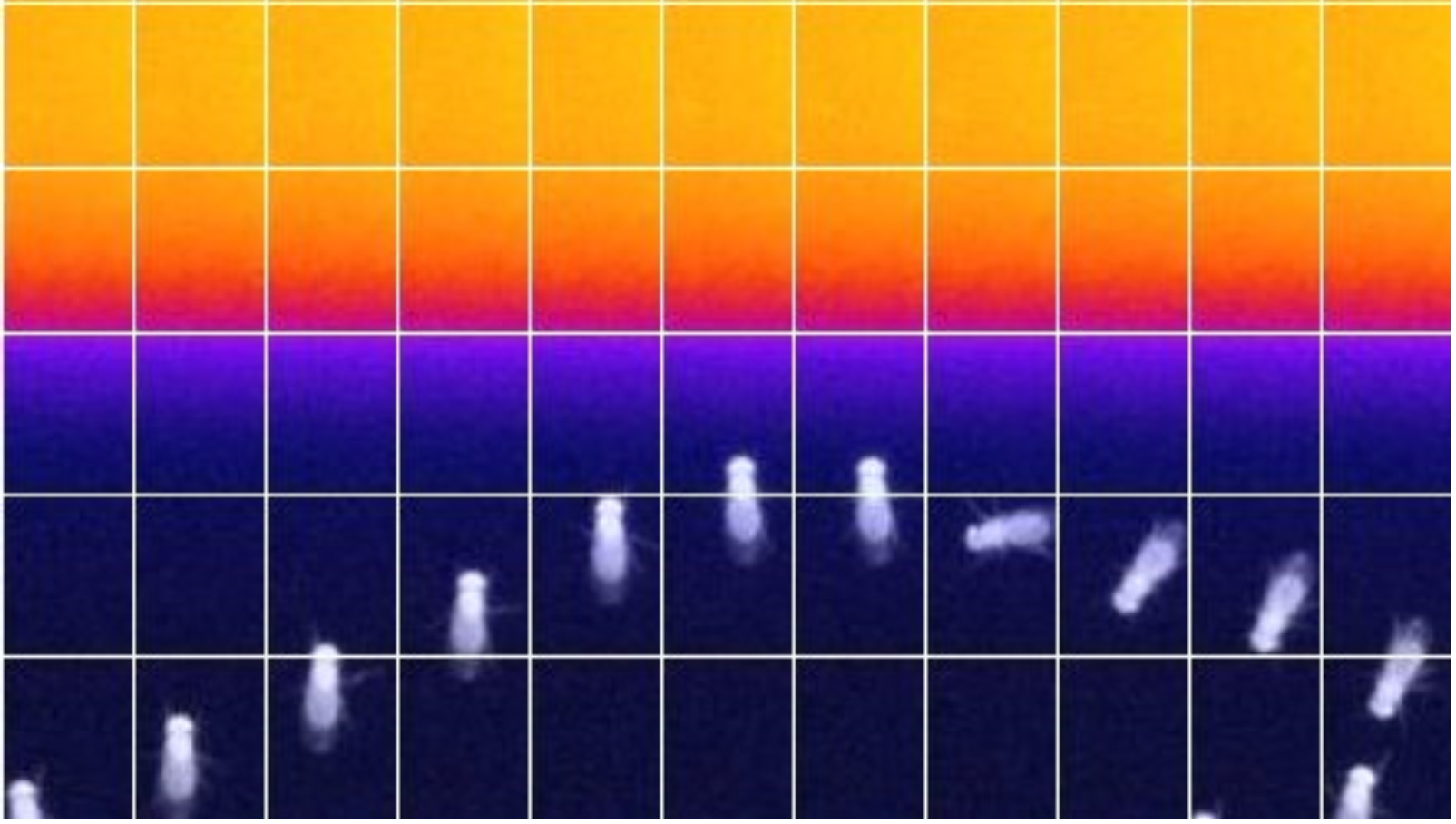

Understanding how the brain works in tight concert with the rest of the central nervous system (CNS) hinges upon knowledge of coordinated activity patterns across the whole CNS. We present a method for measuring activity in an entire, non-transparent CNS with high spatiotemporal resolution. We combine a light-sheet microscope capable of simultaneous multi-view imaging at volumetric speeds 25-fold faster than the state-of-the-art, a whole-CNS imaging assay for the isolated Drosophila larval CNS and a computational framework for analysing multi-view, whole-CNS calcium imaging data. We image both brain and ventral nerve cord, covering the entire CNS at 2 or 5 Hz with two- or one-photon excitation, respectively. By mapping network activity during fictive behaviours and quantitatively comparing high-resolution whole-CNS activity maps across individuals, we predict functional connections between CNS regions and reveal neurons in the brain that identify type and temporal state of motor programs executed in the ventral nerve cord.

The training of deep neural networks is a high-dimensional optimization problem with respect to the loss function of a model. Unfortunately, these functions are high-dimensional and non-convex and hence difficult to characterize. In this paper, we empirically investigate the geometry of the loss functions for state-of-the-art networks with multiple stochastic optimization methods. We do this through several experiments that are visualized on polygons to understand how and when these stochastic optimization methods find minima.
