Filter

Associated Lab

- Ahrens Lab (1) Apply Ahrens Lab filter

- Aso Lab (4) Apply Aso Lab filter

- Branson Lab (53) Apply Branson Lab filter

- Card Lab (3) Apply Card Lab filter

- Cardona Lab (1) Apply Cardona Lab filter

- Dickson Lab (1) Apply Dickson Lab filter

- Dudman Lab (1) Apply Dudman Lab filter

- Fetter Lab (1) Apply Fetter Lab filter

- Freeman Lab (2) Apply Freeman Lab filter

- Harris Lab (1) Apply Harris Lab filter

- Heberlein Lab (1) Apply Heberlein Lab filter

- Jayaraman Lab (1) Apply Jayaraman Lab filter

- Karpova Lab (1) Apply Karpova Lab filter

- Keller Lab (3) Apply Keller Lab filter

- Otopalik Lab (1) Apply Otopalik Lab filter

- Pachitariu Lab (1) Apply Pachitariu Lab filter

- Reiser Lab (4) Apply Reiser Lab filter

- Rubin Lab (8) Apply Rubin Lab filter

- Simpson Lab (1) Apply Simpson Lab filter

- Svoboda Lab (1) Apply Svoboda Lab filter

- Tervo Lab (1) Apply Tervo Lab filter

- Truman Lab (1) Apply Truman Lab filter

- Turaga Lab (5) Apply Turaga Lab filter

- Zlatic Lab (1) Apply Zlatic Lab filter

Associated Project Team

Publication Date

- 2025 (4) Apply 2025 filter

- 2024 (4) Apply 2024 filter

- 2023 (2) Apply 2023 filter

- 2021 (2) Apply 2021 filter

- 2020 (3) Apply 2020 filter

- 2019 (3) Apply 2019 filter

- 2018 (5) Apply 2018 filter

- 2017 (7) Apply 2017 filter

- 2016 (5) Apply 2016 filter

- 2015 (6) Apply 2015 filter

- 2014 (6) Apply 2014 filter

- 2012 (3) Apply 2012 filter

- 2011 (1) Apply 2011 filter

- 2009 (1) Apply 2009 filter

- 2005 (1) Apply 2005 filter

Type of Publication

53 Publications

Showing 31-40 of 53 resultsThe training of deep neural networks is a high-dimension optimization problem with respect to the loss function of a model. Unfortunately, these functions are of high dimension and non-convex and hence difficult to characterize. In this paper, we empirically investigate the geometry of the loss functions for state-of-the-art networks with multiple stochastic optimization methods. We do this through several experiments that are visualized on polygons to understand how and when these stochastic optimization methods find minima.

We propose a framework for detecting action patterns from motion sequences and modeling the sensory-motor relationship of animals, using a generative recurrent neural network. The network has a discriminative part (classifying actions) and a generative part (predicting motion), whose recurrent cells are laterally connected, allowing higher levels of the network to represent high level phenomena. We test our framework on two types of data, fruit fly behavior and online handwriting. Our results show that 1) taking advantage of unlabeled sequences, by predicting future motion, significantly improves action detection performance when training labels are scarce, 2) the network learns to represent high level phenomena such as writer identity and fly gender, without supervision, and 3) simulated motion trajectories, generated by treating motion prediction as input to the network, look realistic and may be used to qualitatively evaluate whether the model has learnt generative control rules.

Naïve Bayes Nearest Neighbour (NBNN) is a simple and effective framework which addresses many of the pitfalls of K-Nearest Neighbour (KNN) classification. It has yielded competitive results on several computer vision benchmarks. Its central tenet is that during NN search, a query is not compared to every example in a database, ignoring class information. Instead, NN searches are performed within each class, generating a score per class. A key problem with NN techniques, including NBNN, is that they fail when the data representation does not capture perceptual (e.g. class-based) similarity. NBNN circumvents this by using independent engineered descriptors (e.g. SIFT). To extend its applicability outside of image-based domains, we propose to learn a metric which captures perceptual similarity. Similar to how Neighbourhood Components Analysis optimizes a differentiable form of KNN classification, we propose 'Class Conditional' metric learning (CCML), which optimizes a soft form of the NBNN selection rule. Typical metric learning algorithms learn either a global or local metric. However, our proposed method can be adjusted to a particular level of locality by tuning a single parameter. An empirical evaluation on classification and retrieval tasks demonstrates that our proposed method clearly outperforms existing learned distance metrics across a variety of image and non-image datasets.

Mice (Mus musculus) form large and dynamic social groups and emit ultrasonic vocalizations in a variety of social contexts. Surprisingly, these vocalizations have been studied almost exclusively in the context of cues from only one social partner, despite the observation that in many social species the presence of additional listeners changes the structure of communication signals. Here, we show that male vocal behavior elicited by female odor is affected by the presence of a male audience - with changes in vocalization count, acoustic structure and syllable complexity. We further show that single sensory cues are not sufficient to elicit this audience effect, indicating that multiple cues may be necessary for an audience to be apparent. Together, these experiments reveal that some features of mouse vocal behavior are only expressed in more complex social situations, and introduce a powerful new assay for measuring detection of the presence of social partners in mice.

In this review, we discuss the emerging field of computational behavioral analysis-the use of modern methods from computer science and engineering to quantitatively measure animal behavior. We discuss aspects of experiment design important to both obtaining biologically relevant behavioral data and enabling the use of machine vision and learning techniques for automation. These two goals are often in conflict. Restraining or restricting the environment of the animal can simplify automatic behavior quantification, but it can also degrade the quality or alter important aspects of behavior. To enable biologists to design experiments to obtain better behavioral measurements, and computer scientists to pinpoint fruitful directions for algorithm improvement, we review known effects of artificial manipulation of the animal on behavior. We also review machine vision and learning techniques for tracking, feature extraction, automated behavior classification, and automated behavior discovery, the assumptions they make, and the types of data they work best with. Expected final online publication date for the Annual Review of Neuroscience Volume 39 is July 08, 2016. Please see http://www.annualreviews.org/catalog/pubdates.aspx for revised estimates.

Metric learning seeks a transformation of the feature space that enhances prediction quality for a given task. In this work we provide PAC-style sample complexity rates for supervised metric learning. We give matching lower- and upper-bounds showing that sample complexity scales with the representation dimension when no assumptions are made about the underlying data distribution. In addition, by leveraging the structure of the data distribution, we provide rates fine-tuned to a specific notion of the intrinsic complexity of a given dataset, allowing us to relax the dependence on representation dimension. We show both theoretically and empirically that augmenting the metric learning optimization criterion with a simple norm-based regularization is important and can help adapt to a dataset’s intrinsic complexity yielding better generalization, thus partly explaining the empirical success of similar regularizations reported in previous works.

Mammalian cerebral cortex is accepted as being critical for voluntary motor control, but what functions depend on cortex is still unclear. Here we used rapid, reversible optogenetic inhibition to test the role of cortex during a head-fixed task in which mice reach, grab, and eat a food pellet. Sudden cortical inhibition blocked initiation or froze execution of this skilled prehension behavior, but left untrained forelimb movements unaffected. Unexpectedly, kinematically normal prehension occurred immediately after cortical inhibition even during rest periods lacking cue and pellet. This 'rebound' prehension was only evoked in trained and food-deprived animals, suggesting that a motivation-gated motor engram sufficient to evoke prehension is activated at inhibition's end. These results demonstrate the necessity and sufficiency of cortical activity for enacting a learned skill.

To investigate the fundamental question of how nervous systems encode, organize, and sequence behaviors, Kato et al. imaged neural activity with cellular resolution across the brain of the worm Caenorhabditis elegans. Locomotion behavior seems to be continuously represented by cyclical patterns of distributed neural activity that are present even in immobilized animals.

Understanding how the brain works in tight concert with the rest of the central nervous system (CNS) hinges upon knowledge of coordinated activity patterns across the whole CNS. We present a method for measuring activity in an entire, non-transparent CNS with high spatiotemporal resolution. We combine a light-sheet microscope capable of simultaneous multi-view imaging at volumetric speeds 25-fold faster than the state-of-the-art, a whole-CNS imaging assay for the isolated Drosophila larval CNS and a computational framework for analysing multi-view, whole-CNS calcium imaging data. We image both brain and ventral nerve cord, covering the entire CNS at 2 or 5 Hz with two- or one-photon excitation, respectively. By mapping network activity during fictive behaviours and quantitatively comparing high-resolution whole-CNS activity maps across individuals, we predict functional connections between CNS regions and reveal neurons in the brain that identify type and temporal state of motor programs executed in the ventral nerve cord.

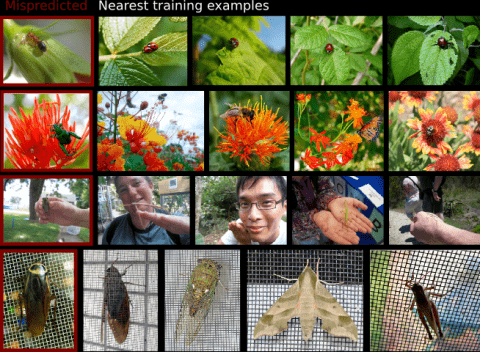

Modern supervised learning algorithms can learn very accurate and complex discriminating functions. But when these classifiers fail, this complexity can also be a drawback because there is no easy, intuitive way to diagnose why they are failing and remedy the problem. This important question has received little attention. To address this problem, we propose a novel method to analyze and understand a classifier's errors. Our method centers around a measure of how much influence a training example has on the classifier's prediction for a test example. To understand why a classifier is mispredicting the label of a given test example, the user can find and review the most influential training examples that caused this misprediction, allowing them to focus their attention on relevant areas of the data space. This will aid the user in determining if and how the training data is inconsistently labeled or lacking in diversity, or if the feature representation is insufficient. As computing the influence of each training example is computationally impractical, we propose a novel distance metric to approximate influence for boosting classifiers that is fast enough to be used interactively. We also show several novel use paradigms of our distance metric. Through experiments, we show that it can be used to find incorrectly or inconsistently labeled training examples, to find specific areas of the data space that need more training data, and to gain insight into which features are missing from the current representation. Code is available at https://github.com/kristinbranson/InfluentialNeighbors.